- Update at 2016-08-05: added Kubernetes CNI + flannel integration to deploy an RC using the newly created VLAN, and more troubleshooting for VLAN IP management.

Multiple VLANs for a Docker cluster

Abstract

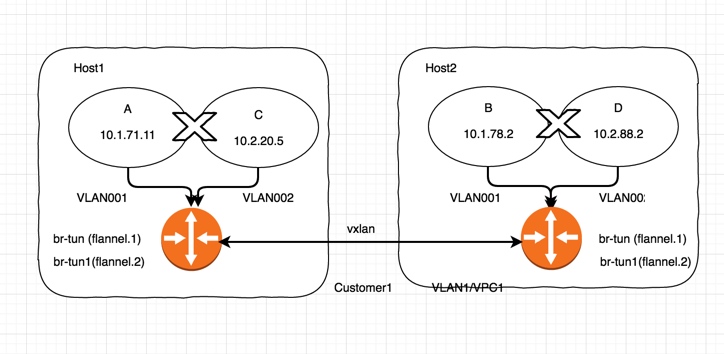

Together, flannel and CNI make it possible to run multiple VXLAN networks over multiple interfaces using the same bridge. This simplifies VXLAN configuration for a Kubernetes cluster, or for any multi-host Docker cluster.

Set up multiple flannel VLANs

1. Create the VLAN settings for multiple flannel networks

etcdctl set /coreos.com/network/vlan001/config '{ "Network": "10.1.0.0/16", "Backend": { "Type": "vxlan", "VNI": 1 } }'

etcdctl set /coreos.com/network/vlan002/config '{ "Network": "10.2.0.0/16", "Backend": { "Type": "vxlan", "VNI": 2 } }'

etcdctl set /coreos.com/network/vlan003/config '{ "Network": "10.3.0.0/16", "Backend": { "Type": "vxlan", "VNI": 3 } }'
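The three commands above differ only in the VLAN number. A minimal sketch that generates them as bash functions, assuming the same convention (VLAN N is the flannel network "vlan00N" with subnet 10.N.0.0/16 and VXLAN VNI N); the function names are hypothetical, and etcdctl is only invoked when push_vlan_configs is called:

```shell
# Hypothetical helpers; convention assumed from the commands above:
# VLAN N -> flannel network "vlan00N", subnet 10.N.0.0/16, VXLAN VNI N.

vlan_name() {
  printf 'vlan%03d' "$1"
}

vlan_config() {
  printf '{ "Network": "10.%d.0.0/16", "Backend": { "Type": "vxlan", "VNI": %d } }' "$1" "$1"
}

# Write one network config per VLAN number into etcd (etcd v2 "set", as above).
push_vlan_configs() {
  local n
  for n in "$@"; do
    etcdctl set "/coreos.com/network/$(vlan_name "$n")/config" "$(vlan_config "$n")"
  done
}
```

For example, push_vlan_configs 1 2 3 issues the same three etcdctl calls shown above.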

2. Configure the master node

/opt/bin/flanneld --etcd-endpoints=http://127.0.0.1:4001 --ip-masq --iface=10.160.61.145 --networks=vlan001,vlan002,vlan003

3. Configure the minion node

/opt/bin/flanneld --etcd-endpoints=http://10.160.61.145:4001 --ip-masq --iface=10.122.158.23 --networks=vlan001,vlan002,vlan003
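With --networks, flanneld leases a separate subnet for each listed network. A sketch to confirm that every VLAN got a lease on a host, assuming flanneld writes one <network>.env file per network (containing FLANNEL_SUBNET and FLANNEL_MTU) into a per-network subnet directory; that directory is assumed here to be /run/flannel/networks, so check your flannel version. The function name is hypothetical:

```shell
# Hypothetical check: print the subnet and MTU leased for each flannel network.
# Usage: show_vlan_leases /run/flannel/networks vlan001 vlan002 vlan003
show_vlan_leases() {
  local dir=$1 net
  shift
  for net in "$@"; do
    if [ -f "$dir/$net.env" ]; then
      # Source in a subshell so FLANNEL_* variables do not leak into the caller.
      (
        unset FLANNEL_SUBNET FLANNEL_MTU
        . "$dir/$net.env"
        printf '%s %s mtu=%s\n' "$net" "$FLANNEL_SUBNET" "$FLANNEL_MTU"
      )
    else
      printf '%s no-lease\n' "$net"
    fi
  done
}
```

Running it on both the master and the minion should show a different /24 per host within each VLAN's /16.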


4. Choose a VLAN for the Docker daemon

docker -d --bip=${FLANNEL_SUBNET} --mtu=${FLANNEL_MTU}
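FLANNEL_SUBNET and FLANNEL_MTU come from the subnet file of whichever VLAN you choose. A hypothetical helper that turns one such file into the two daemon flags and fails if either variable is missing (the file path in the usage line is an assumption):

```shell
# Hypothetical helper: turn one VLAN's flannel subnet file into Docker daemon flags.
# Usage (assumed path): docker -d $(docker_opts_for_vlan /run/flannel/networks/vlan002.env)
docker_opts_for_vlan() {
  local env_file=$1
  (
    unset FLANNEL_SUBNET FLANNEL_MTU
    . "$env_file" || exit 1
    # Abort the subshell with an error if the file lacks either variable.
    : "${FLANNEL_SUBNET:?not set in $env_file}" "${FLANNEL_MTU:?not set in $env_file}"
    printf -- '--bip=%s --mtu=%s\n' "$FLANNEL_SUBNET" "$FLANNEL_MTU"
  )
}
```

The command substitution is left unquoted in the usage line on purpose, so the output splits into two separate flags.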

5. Go further: share the same bridge across multiple interfaces

Add two network interfaces to the same bridge using the same VNI.


Conclusion

It is possible to use the same flannel bridge to enable multiple VLANs over multiple interfaces.

Configure CNI on the minion node

A closer look at CNI

Build the CNI plugin for flannel


Install CNI and test it
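A sketch of building and installing the plugins, assuming the containernetworking/cni repository layout of mid-2016 (a ./build script that writes plugin binaries to ./bin) and that the flannel plugin needs the bridge and host-local plugins it delegates to. The function names are hypothetical; /opt/cni/bin is the plugin directory kubelet expects, per the kubelet notes in this article:

```shell
# Hypothetical helpers. Assumes Go is installed and the CNI repo provides ./build,
# which places plugin binaries in ./bin.
build_cni() {
  local src=$1
  ( cd "$src" && ./build )
}

# flannel delegates to bridge, which allocates addresses via host-local,
# so all three binaries must be present in the destination directory.
install_cni_plugins() {
  local bindir=$1 dest=${2:-/opt/cni/bin} p
  mkdir -p "$dest" || return 1
  for p in flannel bridge host-local; do
    if [ ! -x "$bindir/$p" ]; then
      echo "missing plugin: $bindir/$p" >&2
      return 1
    fi
    cp "$bindir/$p" "$dest/" || return 1
  done
}
```

For example, after git clone https://github.com/containernetworking/cni.git, run build_cni cni && install_cni_plugins cni/bin as root.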


Set up VLAN001 on all hosts

1. Set up CNI for flannel
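The flannel CNI plugin reads the subnet file written by flanneld and delegates the actual interface setup to the bridge plugin. A sketch that writes one config per VLAN, each into its own directory so it can be selected with NETCONFPATH; the net<N>.d naming follows the net2.d/net3.d paths used elsewhere in this article, while the subnet file path and the default bridge name are assumptions to adapt (the container output in this article shows a bridge named br-tun1). The function name is hypothetical:

```shell
# Hypothetical helper: write the CNI config for VLAN N into <root>/net<N>.d/.
# Assumptions: flannel subnet files live in /run/flannel/networks/<name>.env,
# and each VLAN gets its own bridge (default cni-vlan<N>; Linux caps names at 15 chars).
write_vlan_cni_conf() {
  local root=$1 n=$2 name bridge
  name=$(printf 'vlan%03d' "$n")
  bridge=${3:-cni-vlan$n}
  mkdir -p "$root/net$n.d" || return 1
  cat > "$root/net$n.d/10-$name.conf" <<EOF
{
  "name": "$name",
  "type": "flannel",
  "subnetFile": "/run/flannel/networks/$name.env",
  "delegate": {
    "bridge": "$bridge"
  }
}
EOF
}
```

For example, write_vlan_cni_conf /etc/cni 1 creates /etc/cni/net1.d/10-vlan001.conf; pass a third argument to reuse an existing bridge name.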


2. Modify docker-run1.sh to apply a different network configuration to newly created Docker containers.
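The CNI repository's scripts/docker-run.sh starts a network-less container, runs exec-plugins.sh add on its namespace, and then runs the real container inside that namespace; exec-plugins.sh picks up its configs from NETCONFPATH and its plugins from CNI_PATH. As an alternative to keeping one edited copy per VLAN, a hypothetical wrapper could select the VLAN through NETCONFPATH (the net<N>.d layout and the /opt/cni/bin default are assumptions):

```shell
# Hypothetical wrapper around the CNI repo's scripts/docker-run.sh.
# Assumes VLAN N's configs live in $CNI_CONF_ROOT/net<N>.d and plugins in /opt/cni/bin.
DOCKER_RUN=${DOCKER_RUN:-./docker-run.sh}
CNI_CONF_ROOT=${CNI_CONF_ROOT:-/etc/cni}

run_on_vlan() {
  local n=$1
  shift
  # Both variables are exported only to this invocation and inherited by exec-plugins.sh.
  NETCONFPATH="$CNI_CONF_ROOT/net$n.d" CNI_PATH="${CNI_PATH:-/opt/cni/bin}" \
    "$DOCKER_RUN" "$@"
}
```

For example, run_on_vlan 1 --rm busybox:latest ifconfig, run as root from the scripts directory.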


3. Create a new container using VLAN001 on both host1 and host2


4. Result

1. ContainerA on Host1 using vlan001


2. ContainerB on Host2 using vlan001


Set up VLAN002 on all hosts

1. Set up the CNI settings for flannel


2. Modify docker-run2.sh to apply a different network configuration to newly created Docker containers.


3. Create a new container using VLAN002 on both host1 and host2


VLAN002 setup for ContainerC and ContainerD on different hosts

1. ContainerC on Host1 using vlan002

    eth0      Link encap:Ethernet HWaddr FE:07:71:04:74:CD
              inet addr:10.2.20.5 Bcast:0.0.0.0 Mask:255.255.255.0
              inet6 addr: fe80::fc07:71ff:fe04:74cd/64 Scope:Link
              UP BROADCAST RUNNING MULTICAST MTU:1400 Metric:1
              RX packets:11827 errors:0 dropped:0 overruns:0 frame:0
              TX packets:11837 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:1150970 (1.0 MiB) TX bytes:1151210 (1.0 MiB)

    10.2.20.0 * 255.255.255.0 U 0 0 0 br-tun1

2. ContainerD on Host2 using vlan002

    eth0      Link encap:Ethernet HWaddr 0E:10:EB:05:36:C4
              inet addr:10.2.88.2 Bcast:0.0.0.0 Mask:255.255.255.0
              inet6 addr: fe80::c10:ebff:fe05:36c4/64 Scope:Link
              UP BROADCAST RUNNING MULTICAST MTU:1400 Metric:1
              RX packets:9786 errors:0 dropped:0 overruns:0 frame:0
              TX packets:9691 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:949748 (927.4 KiB) TX bytes:933454 (911.5 KiB)

    10.2.88.0 * 255.255.255.0 U 0 0 0 br-tun1

Connection isolation between containers

1. ContainerB reaches ContainerA because both are in VLAN001 (10.1.0.0/16).


2. ContainerD reaches ContainerC because both are in VLAN002 (10.2.0.0/16).


3. ContainerB does NOT reach ContainerD, even though both are on the same host, because they are in different VLANs.


4. ContainerD does NOT reach ContainerA either, since they are not in the same flannel VLAN.
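The four results follow from the subnet plan: VLAN N is 10.N.0.0/16, so two containers should reach each other exactly when their addresses share the first two octets. A hypothetical sketch that compares that expectation with a real ping from inside the source container; it assumes the container image has a ping that accepts -c and -W, as busybox does:

```shell
# Under this article's plan, VLAN N == 10.N.0.0/16, so the first two octets identify the VLAN.
same_vlan() {
  [ "$(echo "$1" | cut -d. -f1-2)" = "$(echo "$2" | cut -d. -f1-2)" ]
}

# check_pair <container> <its IP> <target IP>: ping once, report, and
# succeed only if the observed result matches the expectation.
check_pair() {
  local src=$1 src_ip=$2 dst_ip=$3 expect=unreachable actual=unreachable
  same_vlan "$src_ip" "$dst_ip" && expect=reachable
  docker exec "$src" ping -c 1 -W 1 "$dst_ip" >/dev/null 2>&1 && actual=reachable
  printf '%s -> %s: expected %s, got %s\n' "$src_ip" "$dst_ip" "$expect" "$actual"
  [ "$expect" = "$actual" ]
}
```

For example, check_pair containerD 10.2.88.2 10.2.20.5 checks result 2 using the addresses shown for ContainerD and ContainerC.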


Configure kubelet to enable CNI+Flannel

The CNI plugin is selected by passing Kubelet the --network-plugin=cni command-line option. Kubelet reads the first CNI configuration file from --network-plugin-dir and uses the CNI configuration from that file to set up each pod’s network. The CNI configuration file must match the CNI specification, and any required CNI plugins referenced by the configuration must be present in /opt/cni/bin.
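To see which file kubelet would pick from a directory, a sketch that lists the *.conf files in byte-wise name order and prints the first one. It assumes kubelet of this era sorts candidate file names as plain strings and only considers the .conf extension; kubelet also skips files it cannot parse, which this helper does not check. The function name is hypothetical:

```shell
# Hypothetical helper: print the CNI config kubelet would most likely use,
# assuming it takes the first *.conf file in byte-wise name order.
first_cni_conf() {
  local dir=$1
  find "$dir" -maxdepth 1 -type f -name '*.conf' | LC_ALL=C sort | head -n 1
}
```

For example, first_cni_conf /etc/cni/net2.d; a numeric prefix such as 10- is a simple way to control which file wins.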

Kubelet configuration file:

- ubuntu: /etc/default/kubelet

- centos: /etc/sysconfig/kubelet

Append the following parameters to “KUBELET_OPTS=”:

--network-plugin=cni --network-plugin-dir=/etc/cni/net2.d

Restart kubelet

service kubelet restart

ADD/DEL interface to container
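exec-plugins.sh (from the CNI repository's scripts directory) takes the container ID and the path of the container's network namespace, which is /proc/<PID>/ns/net for the container's main process. A hypothetical helper that looks the PID up with docker inspect and selects the VLAN through NETCONFPATH:

```shell
# Hypothetical helper: cni_vlan add|del <NETCONFPATH> <container_id>
# EXEC_PLUGINS can point elsewhere if you are not in the CNI scripts/ directory.
EXEC_PLUGINS=${EXEC_PLUGINS:-./exec-plugins.sh}

cni_vlan() {
  local action=$1 netconf=$2 container=$3 pid
  case $action in
    add|del) ;;
    *) echo "usage: cni_vlan add|del NETCONFPATH CONTAINER_ID" >&2; return 2 ;;
  esac
  # The container's main process owns the network namespace to modify.
  pid=$(docker inspect --format '{{.State.Pid}}' "$container") || return 1
  # docker reports PID 0 for a stopped container, which has no namespace to join.
  [ "$pid" -gt 0 ] 2>/dev/null || { echo "container $container is not running" >&2; return 1; }
  NETCONFPATH=$netconf "$EXEC_PLUGINS" "$action" "$container" "/proc/$pid/ns/net"
}
```

For example, cni_vlan add /etc/cni/net3.d 35b6274bbaeb resolves the PID itself and then makes the same exec-plugins.sh add call as the NETCONFPATH=/etc/cni/net3.d command in this section.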

./exec-plugins.sh add <container_id> <nspath /proc/PID/ns/net>

NETCONFPATH=/etc/cni/net3.d ./exec-plugins.sh add 35b6274bbaeb /proc/32118/ns/net

NETCONFPATH=/etc/cni/net3.d ./exec-plugins.sh del 35b6274bbaeb /proc/32118/ns/net

Run a deployment to test the CNI VXLAN 10.2.0.0/16; all containers running inside the same VLAN can reach each other.

kubectl run nginx-eric --image=nginx --replicas=6
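To check that every replica landed in VLAN002, a sketch that reads the pod IPs and verifies they all fall in 10.2.0.0/16. It assumes kubectl run labels the pods run=nginx-eric (its default labeling) and that your kubectl supports -o jsonpath; the helper names are hypothetical:

```shell
# Pod IPs for the pods created by "kubectl run <name>" (label run=<name>).
pod_ips() {
  kubectl get pods -l "run=$1" -o jsonpath='{.items[*].status.podIP}'
}

# all_in_vlan N IP...: succeed only if at least one IP is given and all are in 10.N.0.0/16.
all_in_vlan() {
  local vlan=$1 ip
  shift
  [ "$#" -gt 0 ] || { echo "no pod IPs found" >&2; return 1; }
  for ip in "$@"; do
    case $ip in
      "10.$vlan."*) ;;
      *) echo "pod IP $ip is outside 10.$vlan.0.0/16" >&2; return 1 ;;
    esac
  done
}
```

For example, all_in_vlan 2 $(pod_ips nginx-eric); the substitution is unquoted so the space-separated IPs become separate arguments.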


Known limitations

- [x] An issue with CNI plugin arguments was found on kubelet 1.2.4. Make sure the kubelet version is >= 1.3.

- [x] There is no configurable way to enable multiple VLANs using kubelet's default CNI support (master as of 7/27/2016 does not support it). Solution: develop a CNI exec plugin to add and delete interfaces, and extend the YAML spec.

- [x] nsenter is missing on Ubuntu 14.04, whereas it ships by default in util-linux on CentOS 7, so CentOS 7+ is strongly recommended as the host OS rather than Ubuntu.

Troubleshooting

- [x] vlan0002 : error executing ADD: no IP addresses available in network: vlan0002

Solution: check the last reserved IP and whether all IP addresses are occupied, then remove the stale IP address entries and last_reserved_ip.
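The cleanup can be scripted. A sketch assuming the host-local IPAM on-disk layout: a directory /var/lib/cni/networks/<network name>/ holding one file per reserved IP (named after the IP, first line the container ID) plus last_reserved_ip. It treats an IP as stale when docker inspect no longer finds the recorded container, which only holds for containers started through Docker, so review the stale list before deleting anything. The helper names are hypothetical:

```shell
# Hypothetical helpers for host-local IPAM state under $IPAM_DIR/<network>/.
IPAM_DIR=${IPAM_DIR:-/var/lib/cni/networks}

# Print reserved IPs whose container is no longer known to Docker.
stale_ips() {
  local net=$1 f id
  for f in "$IPAM_DIR/$net"/*; do
    [ -f "$f" ] || continue
    # Only IPv4-named files are reservations; skip last_reserved_ip and anything else.
    case ${f##*/} in *.*.*.*) ;; *) continue ;; esac
    id=$(head -n 1 "$f")
    docker inspect "$id" >/dev/null 2>&1 || echo "${f##*/}"
  done
}

# Remove the stale reservations, then reset last_reserved_ip as the solution suggests.
release_stale_ips() {
  local net=$1 ip
  for ip in $(stale_ips "$net"); do
    rm -f -- "$IPAM_DIR/$net/$ip"
  done
  rm -f -- "$IPAM_DIR/$net/last_reserved_ip"
}
```

For example, run stale_ips vlan0002 to review the list, then release_stale_ips vlan0002 as root.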
